
WayneBearCal

Joined: 09 Apr 2014

Posts: 33

Posted: 01/22/15 5:03 pm ::: The Field of 64: Does Princeton Deserve a #2 Seed?

Most if not all of the preliminary WBB brackets that you see are attempts to predict what the selection committee will do. This is an attempt to objectively determine what the bracket SHOULD look like.

As I mentioned before in a previous thread, I've been creating brackets for many years. Initially I picked the bracket as I saw fit but in recent years, I tried to predict the committee's actions in an attempt to outdo ESPN (I was always better at deciphering the bubble and picking the field of 64, but Creme was always better at predicting seeding lines). Since ESPN's predictions pretty much converged with mine last season, I'm going back to my original idea of picking a proper bracket rather than mimicking the committee.

Here's my methodology, and keep in mind that it is willfully data-based without my own personal opinions on the effect of recent injuries or team trends. RPI is clearly NOT the best way to either rank teams or to define strength of schedule (and certainly no one could believe that arbitrary dividing lines such as top 25, top 50 and top 100 should be the basis for defining good wins and bad losses). More nuanced and time-tested methods which take point-spread into account such as the Sagarin and Massey ratings form a more rational basis for picking a proper field. Nevertheless, as an NCAA-sanctioned methodology, RPI should be factored in as well.

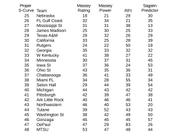

I assigned equal weight (25%) to each of these four factors: RPI, the Sagarin Predictor (the second rightmost column of his ratings), the Massey Rating (which measures performance to-date) and the Massey Power (more relevant to predicting future games). I essentially double-weighted Massey based on finding his ratings to be mostly better at predicting WBB results than Sagarin. For each factor, I calculated a 0 to 100 rating, assigning a 100 to the strongest Division I team and 0 to the weakest. Intermediate ratings were based NOT on positional ranking but relative strength (for example, UConn was so far ahead in the Massey Rating numbers that while they earned a 100, 2nd and 3rd-best South Carolina and Notre Dame each only earned an 87). The NCAA only provides the positional rankings on RPI, so I used the actual numerical RPI data on WarrenNolan.com (which has historically been more accurate than RealTimeRPI or WBBState).
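The rescaling and blend described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the spreadsheet actually used. Each source is assumed to be a plain dict of team to numeric rating where higher is better (true for the RPI percentage and for the Sagarin and Massey numbers). The team names and numbers are made up, and `rescale_0_100` and `composite` are hypothetical helper names. In real use every source would need the full Division I list, so that the 100 and 0 anchors are the actual strongest and weakest teams.

```python
# Sketch of the 0-100 rescaling and 25%-each blend (hypothetical helpers, made-up data).

def rescale_0_100(ratings: dict[str, float]) -> dict[str, float]:
    """Strongest team -> 100, weakest -> 0, everyone else by relative strength (not rank)."""
    hi, lo = max(ratings.values()), min(ratings.values())
    if hi == lo:
        raise ValueError("all ratings are equal; cannot rescale")
    return {team: 100.0 * (value - lo) / (hi - lo) for team, value in ratings.items()}


def composite(sources: dict[str, dict[str, float]],
              weights: dict[str, float]) -> dict[str, float]:
    """Weighted sum of the rescaled ratings for teams present in every source."""
    scaled = {name: rescale_0_100(r) for name, r in sources.items()}
    common = set.intersection(*(set(r) for r in scaled.values()))
    return {team: sum(weights[name] * scaled[name][team] for name in scaled)
            for team in common}


# Illustrative numbers only; real inputs would cover every Division I team.
sources = {
    "RPI": {"A": 0.70, "B": 0.60, "C": 0.40},
    "Sagarin Predictor": {"A": 100.0, "B": 90.0, "C": 70.0},
    "Massey Rating": {"A": 3.0, "B": 2.0, "C": 0.0},
    "Massey Power": {"A": 10.0, "B": 9.0, "C": 0.0},
}
weights = {name: 0.25 for name in sources}

scores = composite(sources, weights)
for team in sorted(scores, key=scores.get, reverse=True):
    print(team, round(scores[team], 1))
```

With these made-up inputs, team B is 2nd in every positional ranking, but its 90 on Massey Power lifts its composite to 72.5. A rank-based average would hide that relative-strength gap.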

My first rankings are based on all games thru 1/20. The teams are listed in S-Curve order, though I only show the seeding line. The ESPN seedings (based on Creme's bracketology for games thru 1/18) are shown in parentheses for comparison. (Note that these are ESPN's seedings BEFORE any procedural bumps to better reflect his original S-Curve.)

1 (1) Connecticut

1 (1) Notre Dame

1 (2) Baylor

1 (1) South Carolina

2 (2) Maryland

2 (1) Tennessee

2 (7) Princeton

2 (2) Louisville

3 (3) Arizona St

3 (2) Oregon St

3 (4) Florida St

3 (4) Duke

4 (3) North Carolina

4 (3) Texas

4 (4) Kentucky

4 (6) UW Green Bay

5 (5) Iowa

5 (9) South Florida

5 (5) George Washington

5 (6) Washington

6 (10) W Kentucky

6 (8) Oklahoma

6 (5) Stanford

6 (4) Minnesota

7 (6) Nebraska

7 (3) Texas A&M

7 (7) James Madison

7 (11) Syracuse

8 (5) Mississippi St

8 (9) Dayton

8 (13) FL Gulf Coast

8 (7) Pittsburgh

9 (10) Rutgers

9 (6) Georgia

9 (11) Northwestern

9 (8) Miami FL

10 (10) Ohio St

10 (8) California

10 (9) Seton Hall

10 (11) Tulane

11 (11) Chattanooga

11 (7) Michigan

11 (9) Iowa St

11 (10) Washington St

12 (13) Ark Little Rock

12 (--) Arkansas (in ESPN's first four out)

12 (--) DePaul (in ESPN's second four out)

12 (12) Gonzaga

13 (13) Quinnipiac

13 (13) Wichita St

13 (12) Long Beach St

13 (12) Fresno St

14 (14) Ohio (ESPN selected Akron in the MAC)

14 (15) Albany NY

14 (14) S Dakota St (ESPN selected South Dakota in the Summit)

14 (14) American Univ

15 (14) Hampton

15 (15) Liberty

15 (15) E Washington (ESPN selected Sacramento State in the Big Sky)

15 (16) TN Martin

16 (15) Bryant (ESPN selected Central Connecticut St in the Northeast)

16 (16) CS Bakersfield (ESPN selected UT Pan American in the WAC)

16 (16) SF Austin (ESPN selected Lamar in the Southland)

16 (16) TX Southern
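Because the list above is in S-curve order with four teams per line, a team's seed line follows directly from its position. Princeton, 7th on the curve, lands on the 2 line. The sketch below shows that arithmetic, plus the plain serpentine fill behind the name "S-curve." The function names are hypothetical, and the real committee bracket also applies procedural bumps that this ignores.

```python
# Hypothetical helpers for reading an S-curve (not code from the post).

def seed_line(s_curve_pos: int, teams_per_line: int = 4) -> int:
    """1-based S-curve position -> seed line (positions 1-4 are 1 seeds, 5-8 are 2 seeds, ...)."""
    return (s_curve_pos - 1) // teams_per_line + 1


def serpentine_region(s_curve_pos: int, teams_per_line: int = 4) -> int:
    """0-based region slot under a plain serpentine fill:
    odd seed lines fill left-to-right, even lines right-to-left."""
    line = seed_line(s_curve_pos, teams_per_line)
    slot = (s_curve_pos - 1) % teams_per_line
    return slot if line % 2 == 1 else teams_per_line - 1 - slot


top_eight = ["Connecticut", "Notre Dame", "Baylor", "South Carolina",
             "Maryland", "Tennessee", "Princeton", "Louisville"]
for pos, team in enumerate(top_eight, start=1):
    print(pos, team, "seed", seed_line(pos), "region slot", serpentine_region(pos))
```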

First Nine Out:

(--) (12) West Virginia

(--) (--) Oklahoma St (in ESPN's first four out)

(--) (--) Vanderbilt (not listed by ESPN)

(--) (--) NC State (in ESPN's first four out)

(--) (--) Purdue (not listed by ESPN)

(--) (--) MTSU (not listed by ESPN)

(--) (--) Stetson (not listed by ESPN)

(--) (--) Illinois (not listed by ESPN)

(--) (8) St. John's

Multiple-bid conferences:

8 ACC

8 Big Ten

7 SEC

6 Pac 12

4 Big 12

3 American

2 Atlantic 10

2 Big East

Despite some glaring disparities, the ESPN bracket actually comes closer to my proper bracket than it did the last time I did this. It's easy to figure out why - that was several years ago and before the NCAA women's RPI (and committee) properly factored in home vs. road performance. And I'm not troubled by the discrepancies within conferences, since Creme used conference standings as of 1/18 (despite wildly unbalanced schedules to-date) whereas I relied on power ratings that presumably give a better forecast as to how the conference races will eventually play out.

But the blatant bias vs. mid-majors still exists, highlighted by Princeton but also reflected in the lower ESPN seedings for UW Green Bay, Florida Gulf Coast, Western Kentucky and perhaps former mid-major South Florida. (Of course, this isn't ESPN's bias but rather a good guess as to what the committee would do.) I'm very aware of all the rationalizations for giving lower seeds to mid-majors but what it essentially comes down to is using RPI-based data to claim a lack of sufficient evidence, which is then used as the justification for a presumptively negative decision.

I'm also aware of the "eye test" arguments. I'm sure I've seen as broad a variety of games as anyone, so I'll offer my opinion specifically on Princeton. To me it's possible to hazard a guess as to where the Tigers would finish if they played in a power conference or in one of the stronger mid-major conferences. I won't specify the positions lest it set off a round of chest-beating by advocates for various teams and conferences, and obviously their style of play would be better suited for some conferences than others. But I will say that my guesses would be very consistent not with a 2 seed but rather with a 4 or 5 seed. Even accounting for perceptual bias, I would guess their true standing with the committee right now is perhaps a 6 seed, or even a 5. Should Princeton finish undefeated, I think the committee would be forced to rethink an institutional history of citing a lack of evidence to justify a negative finding, and even without this soul-searching the headline effect of an unblemished record would probably boost them up at least one seeding line. Personally, I would love to see the Tigers go undefeated just to see what the committee would do.

_________________

AKA WayneBear at BearInsider.com

Avatar: Paige Bowie, Jennie Leander and Lauren Ashbaugh celebrate after Cal's upset of #9 Colorado State on December 21, 1998


myrtle

Joined: 02 May 2008

Posts: 32335

Posted: 01/22/15 6:55 pm

Princeton: wins against Michigan and Pittsburgh, and nobody else of any weight whatsoever, could/should never equal a #2 seed.

_________________

For there is always light,

if only we’re brave enough to see it.

If only we’re brave enough to be it.

- Amanda Gorman


GlennMacGrady

Joined: 03 Jan 2005

Posts: 8227

Location: Heisenberg

Posted: 01/24/15 10:33 pm

Impressive research, data collection and work effort. Thanks.

I'm never much interested in rankings, seedings or brackets, but the methodology seems very reasonable.

As to Princeton, if a reasonable methodology gives them a higher seed than the subjective eyeball test--or, far more likely, the absence of any eyeball test--I say, so be it. If a team goes undefeated in a particular year, why shouldn't they be rewarded in seedings for that year? To me, the lack of tournament success in earlier years should be irrelevant.

ArtBest23

Joined: 02 Jul 2013

Posts: 14550

Posted: 01/25/15 12:30 am

| GlennMacGrady wrote: |

Impressive research, data collection and work effort. Thanks.

I'm never much interested in rankings, seedings or brackets, but the methodology seems very reasonable.

As to Princeton, if a reasonable methodology gives them a higher seed than the subjective eyeball test--or, far more likely, the absence of any eyeball test--I say, so be it. If a team goes undefeated in a particular year, why shouldn't they be rewarded in seedings for that year? To me, the lack of tournament success in earlier years should be irrelevant. |

Just wondering if you believe that "if a reasonable methodology gives [someone] a [lower] seed than the subjective eyeball test", should that be met with a "so be it" as well?


YourCrimsonNightmare

Joined: 12 Dec 2006

Posts: 269

Posted: 01/25/15 2:22 am

All I want to know is when the next game starts.

7-0 in the only NCAA conference without a team with a losing record.


GlennMacGrady

Joined: 03 Jan 2005

Posts: 8227

Location: Heisenberg

Posted: 01/25/15 2:49 am

| ArtBest23 wrote: |

| GlennMacGrady wrote: |

Impressive research, data collection and work effort. Thanks.

I'm never much interested in rankings, seedings or brackets, but the methodology seems very reasonable.

As to Princeton, if a reasonable methodology gives them a higher seed than the subjective eyeball test--or, far more likely, the absence of any eyeball test--I say, so be it. If a team goes undefeated in a particular year, why shouldn't they be rewarded in seedings for that year? To me, the lack of tournament success in earlier years should be irrelevant. |

Just wondering if you believe that "if a reasonable methodology gives [someone] a [lower] seed than the subjective eyeball test", should that be met with a "so be it" as well? |

Yes, of course. And I think there can be several reasonable methodologies, all of which could result in different seedings. Again, I personally don't really care who gets seeded where or where they play as long as there is some modicum of reason. (I realize other people do care.) A reasonable and consistent methodology matters most to me at the lower boundary, where some teams will get in or out.

WayneBearCal

Joined: 09 Apr 2014

Posts: 33

Posted: 01/27/15 11:58 am

| GlennMacGrady wrote: |

Impressive research, data collection and work effort. Thanks.

I'm never much interested in rankings, seedings or brackets, but the methodology seems very reasonable.

As to Princeton, if a reasonable methodology gives them a higher seed than the subjective eyeball test--or, far more likely, the absence of any eyeball test--I say, so be it. If a team goes undefeated in a particular year, why shouldn't they be rewarded in seedings for that year? To me, the lack of tournament success in earlier years should be irrelevant. |

Thank you. Since I hadn't done this in years, I just threw the data together and provided the "headline" number (i.e. the final seeding result). I ran the numbers again for games thru 1/25 (to match the timeframe for ESPN's latest bracketology) and this time I'll provide a little more data that casts a further negative light on a system that relies heavily on RPI-based data.

Here are tables (just click on each thumbnail) that present not just my "proper" S-Curve rating thru 1/25 but also each team's positional ranking in each of the 4 rating factors. I use the relative 0 to 100 ratings described above instead of positional rankings (hence you can't simply average the 4 positional rankings to come up with the overall ranking), but positional rankings are easier to eyeball. Now, many people on this board try to follow all the conferences and not just their own team, so I ask them: Which ranking best matches your own perception (eye test) of the top teams? Pay special attention to those teams whose rankings vary a lot from rating to rating (e.g. where there's at least a 5-position difference between their highest and lowest ranking).
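The "at least a 5-position difference" screen mentioned above is easy to automate once the four positional rankings sit side by side. Below is a sketch with made-up ranks and a hypothetical `flag_disagreements` helper. It only finds the teams worth a second look and says nothing about which rating is right.

```python
# Hypothetical helper: list teams whose positional ranks span at least `threshold` spots.

def rank_spread(ranks: list[int]) -> int:
    """Gap between a team's best and worst positional rank."""
    return max(ranks) - min(ranks)


def flag_disagreements(table: dict[str, list[int]], threshold: int = 5) -> list[str]:
    """Teams whose best and worst ranks differ by >= threshold, widest spread first."""
    flagged = [team for team, ranks in table.items() if rank_spread(ranks) >= threshold]
    return sorted(flagged, key=lambda t: rank_spread(table[t]), reverse=True)


# Made-up ranks in the order RPI, Sagarin Predictor, Massey Rating, Massey Power.
table = {
    "Team X": [10, 12, 11, 14],
    "Team Y": [25, 9, 8, 10],
    "Team Z": [30, 40, 33, 31],
}
print(flag_disagreements(table))
```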

Everyone can draw their own conclusions but to me the Massey ratings are clearly the most accurate, and as expected the Massey Rating seems to be a better reflection of overall to-date performance whereas Massey Power better reflects current and presumably future trends. Sagarin seems 3rd best and RPI comes in last, with some very inconsistent and misleading rankings that sadly form the basis of what little bracket analysis exists in WBB. I won't bother to compare the rankings to ESPN's bracketology this week because the conclusions are the same as last week: the same systematic bias against mid-majors, and the same mid-season ESPN goofiness in relying on to-date conference standings.

Here are a couple more thoughts. People may wonder how this systematic bias against mid-majors could have developed. Yes, there's the bias in favor of bigger schools due to influence and revenue considerations in the major sports, and that mindset bleeds thru to the minor sports. But I think there's another bias at work even among people (e.g. committee members) who try to be as objective as possible, and it's actually a bias in FAVOR of power conference teams.

When conference play begins in January, we tend to think that power conference teams have the harder task because they play the more challenging teams. But in terms of RPI and traditional committee thinking, their task is actually made much easier simply by playing (and not necessarily winning over) better teams. It's a formula that is extremely forgiving when it comes to losing repeatedly in power conference play and gives a team numerous chances to eventually earn the needed "quality" wins of the top 25 and top 50 variety. Consistency in performance may be demanded of the very top seeds but not anyone else in the power conferences.

But the bar is set ridiculously high for the top mid-majors even after they proved their worth in non-conference play. We think that they should be able to easily run the table in a weaker conference, and a single loss is punished disproportionately. This mindset greatly underestimates the difficulty (and statistical improbability) of winning a long series of games over teams that you "should" beat. Advocates for power conference teams can say that their team would easily go undefeated if they played in the weaker Ivy or Horizon leagues, but imagine if they actually had to do so. They would come into league play with a big target on their backs (just like Princeton and Green Bay), have to play consistently in every game (unlike the middle-seeded power conference teams who are forgiven for having a couple of spectacularly bad conference losses), overcome those single games where an opponent shoots lights out or where that night's set of refs behaves strangely, and play under the continuous pressure of an all-risk, no-reward scenario (no additional credit for winning, but a huge penalty for losing just once). Statistics will tell you that even if you are a vastly superior conference team with a 95% chance of winning each game, your odds are actually LESS than 50-50 of going undefeated over a 16-game conference schedule.
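The closing statistic follows from multiplying per-game win probabilities. Under the simplifying assumption that all 16 games are independent and each is won with the same 95% probability, the unbeaten chance is 0.95^16, about 44%. Going the other way, a team would need roughly a 95.8% per-game win probability just to make an unbeaten run a coin flip. A minimal sketch (helper names are hypothetical):

```python
# Chance of an unbeaten conference run, assuming every game is independent
# and carries the same win probability (a simplification).

def p_undefeated(p_win: float, games: int) -> float:
    """Probability of winning all `games` games."""
    return p_win ** games


def break_even_p(games: int, target: float = 0.5) -> float:
    """Per-game win probability needed for a `target` chance of running the table."""
    return target ** (1 / games)


print(round(p_undefeated(0.95, 16), 3))   # 0.44
print(round(break_even_p(16), 4))          # 0.9576
```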

Along those lines, the Massey website provides a terrific resource for not only viewing the probable outcome of today's (and future) games, but also allows you to create any matchup you wish and then view the statistical probabilities. For example, in a moment of chest-beating I might declare how easy it would be for Cal to run the table in the Horizon League. But if you actually start entering the Cal vs. Horizon matchups on the Massey site you will get a much more sobering picture, one that reinforces just how much safer it is to play in a power conference. (That same exercise will also tell you how few teams in the nation could have matched Princeton's 17-0 non-conference record, irrespective of any RPI-based dismissal of their "easy" schedule.) Just go to the Massey website and click on the Predictions and Matchup links at the top of the page:

http://masseyratings.com/rate.php?s=cbw&sub=NCAA%20I
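Entering matchups one at a time on a matchup page gives a separate win probability for each game, and the chance of running the table is simply their product. In this sketch the probabilities are invented placeholders, not output from masseyratings.com, and the games are assumed to be independent. Even with every game favored, a handful of 85 to 90 percent games pulls the unbeaten chance down quickly.

```python
import math

# Hypothetical per-game win probabilities, as one might read them off a matchup
# page one game at a time. These are NOT real Massey numbers.
slate = [0.99, 0.97, 0.96, 0.94, 0.92, 0.90, 0.88, 0.85]


def p_run_the_table(win_probs: list[float]) -> float:
    """Probability of winning every game, assuming the games are independent."""
    return math.prod(win_probs)


def expected_losses(win_probs: list[float]) -> float:
    """Expected number of losses over the slate (sum of per-game loss chances)."""
    return sum(1.0 - p for p in win_probs)


print(f"unbeaten: {p_run_the_table(slate):.1%}, expected losses: {expected_losses(slate):.2f}")
```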

Last edited by WayneBearCal on 01/27/15 12:40 pm; edited 1 time in total

ArtBest23

Joined: 02 Jul 2013

Posts: 14550

Posted: 01/27/15 12:34 pm

Two questions. Why do you think Massey is "clearly the most accurate"?

And who are the mid majors who you feel "proved their worth in non-conference play" and what do you mean by that?


ArtBest23

Joined: 02 Jul 2013

Posts: 14550

Posted: 01/27/15 12:50 pm

| GlennMacGrady wrote: |

| ArtBest23 wrote: |

| GlennMacGrady wrote: |

Impressive research, data collection and work effort. Thanks.

I'm never much interested in rankings, seedings or brackets, but the methodology seems very reasonable.

As to Princeton, if a reasonable methodology gives them a higher seed than the subjective eyeball test--or, far more likely, the absence of any eyeball test--I say, so be it. If a team goes undefeated in a particular year, why shouldn't they be rewarded in seedings for that year? To me, the lack of tournament success in earlier years should be irrelevant. |

Just wondering if you believe that "if a reasonable methodology gives [someone] a [lower] seed than the subjective eyeball test", should that be met with a "so be it" as well? |

Yes, of course. And I think there can be several reasonable methodologies, all of which could result in different seedings. Again, I personally don't really care who gets seeded where or where they play as long as there is some modicum of reason. (I realize other people do care.) A reasonable and consistent methodology matters most to me at the lower boundary, where some teams will get in or out. |

It certainly matters a lot to the final few teams at the margin whether they get in or are left out, but that has no impact on the outcome or integrity of the tournament. Those last few teams are inevitably going to lose their first game against a 1 or 2 seed anyhow, and I consider naming a champion, not the experience of participating for number 64, to be the primary purpose of the tournament. The integrity of the championship matters more to me, and thus I am more concerned with the top half, where decisions on seeding, matchups, and home court advantage between competitive teams can affect the outcome of games and, by extension, the identity of the ultimate champion. So that reasonable and consistent methodology matters throughout.


WayneBearCal

Joined: 09 Apr 2014

Posts: 33

Posted: 01/27/15 2:04 pm

| ArtBest23 wrote: |

Two questions. Why do you think Massey is "clearly the most accurate"?

And who are the mid majors who you feel "proved their worth in non-conference play" and what do you mean by that? |

I've watched enough games across many conferences (strong and weak) to form my own opinion on where I would rank teams in relation to each other. For each team where there was a large discrepancy between their rankings in the 4 different rating systems, I looked at which system came the closest to my opinion. Sometimes it was Sagarin and fewer times it was RPI, but in the large majority of cases it was one or both of the Massey systems. Keep in mind that I ran the numbers for all Division I teams, so I actually looked well beyond the 64 teams shown on my tables and the verdict was the same - the Massey systems seemed to best reflect what I had seen in the many games that I watched, and RPI seemed the most disconnected from reality.

Of course, each system has its own methodology and biases (for example, each system developer who takes point spread into account has to judge whether and how to diminish the impact of blowout wins), and it could be that Massey's biases simply match my own observational bias. But I would also add that I was very skeptical of the Massey ratings before this season. This season I started to review his prediction page before each day's games, and I became increasingly impressed with how accurate his system seems to be. Quite a few times I've wondered why a team had so much trouble vs. an opponent that they should have easily beaten, but when I rechecked the Massey page I was reminded that he had correctly forecast a tight game. I think that same game-by-game accuracy flows thru to his overall team rankings.
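One way to put a number on game-by-game accuracy, rather than relying on memory of close calls, is to log each day's predicted win probabilities and score them once the results are in. The sketch below uses the Brier score (mean squared error of the forecast probability) and a simple "did the favorite win" rate. These are standard evaluation measures swapped in for illustration; the post does not say either was used, and the logged games are made up.

```python
# Each entry: (predicted probability that the listed team wins, whether it actually won).
# Made-up log for illustration only.
log = [(0.92, True), (0.75, True), (0.60, False), (0.55, True), (0.30, False)]


def brier_score(games: list[tuple[float, bool]]) -> float:
    """Mean squared gap between forecast probability and outcome (0 = perfect, lower is better)."""
    return sum((p - (1.0 if won else 0.0)) ** 2 for p, won in games) / len(games)


def favorite_hit_rate(games: list[tuple[float, bool]]) -> float:
    """Share of games where the side given >= 50% actually won
    (a 0.5 forecast counts as picking the listed team)."""
    hits = sum(1 for p, won in games if (p >= 0.5) == won)
    return hits / len(games)


print(f"Brier: {brier_score(log):.3f}, favorites won: {favorite_hit_rate(log):.0%}")
```

Comparing two rating systems this way only makes sense over the same set of games; the one with the lower Brier score was the better-calibrated forecaster on that sample.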

Re: my comments on mid-majors, I strongly believe that the non-conference portion of the season is the best time to evaluate mid-majors since they will likely have an easier (but not easy) time in conference play. At the end of non-conference play, I felt that certain mid-majors had earned the same consideration that potential at-large power conference teams receive - that they should not be judged unusually harshly for one or two conference losses in the same way that power conference teams can be forgiven for a couple of blowout losses. As for which mid-majors I'm talking about, just look at my table for any mid-major who is still in the top 40, though of course I would have my own very different opinion on each of those teams.


labcoatguy

Joined: 21 Jan 2011

Posts: 58

Posted: 01/31/15 12:55 am

As a ranker of college women's basketball teams for bracketological purposes myself, I applaud this effort.

Are you defining accuracy to mean whichever methodology delivers a result that you just instinctively feel is right? Or is there some other rationale behind the rankings? I agree, it "feels" like Princeton should achieve higher esteem than a 7 seed for having a perfect season (if they reach that goal), but what I "feel" isn't very meaningful or interesting.

Last year, of the four methodologies you blend into your ratings, and using the Paymon methodology described here, RPI was the best (290 pts), followed by Massey Rating (270 pts), Massey Power (266 pts), and Sagarin Predictor (246 pts). They were ranked 3rd, 15th, 18th, and 25th, respectively, of the 32 women's basketball ranking methods I evaluated. Both Massey Rating and RPI got 61 of 64 teams correctly into the field, while Massey Power had 59 and Sagarin Predictor had 58.

You asked which ranking system best matches our own perception of the top teams. I can't speak to this, but I can speak to which ranking system best matched the selection committee's perception of the top teams last year. If we restrict the question to only numerical methods that were published prior to selection of the field, and rank based on how many teams were predicted to within one seed of the actual result of seeds (1) to (6), then pilight's field of 64 model (posted on this message board) and Charlie Burrus's rankings tied for first (21 of 24). If we only count exact seeds, then Sagarin's ELO_SCORE model ties for first with my own S-Factor rankings (12/24).
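The two tallies mentioned here (teams correctly placed in the field, and top-six seeds predicted exactly or within one line) can be sketched as follows. The helper names and toy data are hypothetical, and this is not the Paymon scoring method or labcoatguy's exact procedure, whose details aren't given in the thread. For instance, this sketch simply skips a committee top-six seed that the forecast left out entirely.

```python
def field_overlap(predicted_field: set[str], actual_field: set[str]) -> int:
    """Number of predicted teams that actually made the field."""
    return len(predicted_field & actual_field)


def seed_accuracy(predicted: dict[str, int], actual: dict[str, int],
                  max_seed: int = 6) -> tuple[int, int]:
    """(exact, within_one) counts over teams the committee seeded 1..max_seed."""
    exact = within_one = 0
    for team, actual_seed in actual.items():
        if actual_seed > max_seed or team not in predicted:
            continue  # outside the top lines, or missing from the forecast
        diff = abs(predicted[team] - actual_seed)
        exact += int(diff == 0)
        within_one += int(diff <= 1)
    return exact, within_one


# Toy example with made-up seeds.
actual = {"A": 1, "B": 2, "C": 6, "D": 5, "E": 9}
predicted = {"A": 1, "B": 3, "C": 7, "E": 9}
print(field_overlap(set(predicted), set(actual)))  # 4
print(seed_accuracy(predicted, actual))            # (1, 3)
```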

Looking forward to including your ranking system in my annual meta-bracketology analysis!

WayneBearCal

Joined: 09 Apr 2014

Posts: 33

Posted: 02/03/15 2:41 pm

ESPN bracketologist Charlie Creme has addressed the "Princeton Predicament" in this excellent article that accurately details the twisted logic of traditional selection-committee thinking:

http://espn.go.com/womens-college-basketball/story/_/id/12266750/are-princeton-tigers-closing-ivy-league-best-ncaa-tournament-seed

Anyway, note that while doing very little over the past month (largely due to a 3-week break), Princeton has moved up in ESPN's bracketology since January 5 from a #10 seed to a #7 and now a #6. No doubt feedback from someone close to the committee helped trigger the first move from 10 to 7, but I think the move is primarily due to the growing realization that traditional committee thinking is going to be severely tested if they have to discuss an undefeated Princeton team. I'm sure some people are hoping that the issue will simply go away (i.e. that the Tigers will lose an Ivy League game).

| labcoatguy wrote: |

As a ranker of college women's basketball teams for bracketological purposes myself, I applaud this effort.

Are you defining accuracy to mean whichever methodology delivers a result that you just instinctively feel is right? Or is there some other rationale behind the rankings? I agree, it "feels" like Princeton should achieve higher esteem than a 7 seed for having a perfect season (if they reach that goal), but what I "feel" isn't very meaningful or interesting ...

Looking forward to including your ranking system in my annual meta-bracketology analysis! |

I couldn't imagine having to describe my methodology in any more detail UNTIL I checked out your very interesting blog. I in turn applaud your effort, which is similar to my own attempts in the past to create a formula to divine the illogical (i.e. predict the bracket).

Anyway, you're obviously a person who would be interested in more detail so here it is. But I have to point out again that this is NOT an attempt to predict the bracket. This is an attempt to create an objective or "proper" bracket. So the test on Selection Monday is not how close I come to the bracket, it's a test of how close the committee comes to the proper bracket. Details:

1. To determine the "proper" bracket, I only wanted to use publicly available rankings that had an extensive history of ranking all Division I WBB teams. Unless I've overlooked some service, that's RPI, Sagarin and Massey. I didn't want to use WBBState because not everyone has access to that subscription service (and frankly, their "State of WCBB" methodology results in a ranking that is just too far afield to be useful).

2. RPI produces one set of data, a percentage for each team. Sagarin has 2 measures with an extensive history, the Predictor and ELO Score. Massey also has 2 measures, Massey Rating and Massey Power. RPI and Massey Rating assess the entire season-to-date performance. The Sagarin measures and Massey Power also assess season-to-date performance but emphasize recent trends in an attempt to be more predictive of future games. Both types of measures should be used, because the NCAA has always emphasized the total body of work while also giving credit for how well a team finishes the season. I also felt comfortable using point-spread based data because even though the NCAA's RPI only looks at wins and losses, the NCAA has also said that it is necessary to view games to subjectively evaluate teams (the "eye test"). The final point spread does not always accurately reflect the nature of a single game, but over a long series of games, point-spread data is a very useful tool in approximating the eye test.

3. There have been many discussions and even a few dubious studies on the men's side about which measure has the best ability to predict games, but these findings are tenuous at best and do not necessarily apply to a women's game that has a very different pattern of distribution of team strength. In the absence of any guideline on how to choose and weight each available factor, I necessarily had to subjectively look at the rankings and numbers produced by each service to judge the merits of each methodology.

4. I have followed the Sagarin ratings for many years and have consistently found that their ELO Score did not serve a distinctive purpose in WBB ratings - it's meant to emphasize winning and losing much more than point-spread, but in fact ends up forming a middle ground between the Sagarin Predictor and RPI. So I dropped ELO Score because it was redundant (i.e. using ELO would in effect duplicate and overweight the Predictor and RPI).

5. That left 4 measures to be assessed: RPI, Sagarin Predictor and the two different Massey rankings. I don't want to state my own conclusions as fact, so again I invite everyone to review the data produced by each service. Now, if you're primarily following one team or one conference, you may have a hard time deciding which service seems the most accurate. But if you're truly a nationwide fan who's followed many conferences and who has followed the season to date very closely, I think you could at least guess what your own personal rankings would be for the top 60 (that would be enough to cover the at-large selection process and the top 12 seeding lines). You might not be able to rattle off who you think should be ranked 51-60, but you could at least look at someone else's ranking and spot what you believe to be anomalous and flat-out wrong decisions. Here are the different links:

http://www.ncaa.com/rankings/basketball-women/d1/ncaa-womens-basketball-rpi

http://www.rpiratings.com/womrate.php

http://masseyratings.com/rate.php?s=cbw&sub=NCAA%20I

6. I've already said that I decided that the Massey ratings were the most accurate, but maybe a bit more explanation will help. My greatest concern is the introduction of anomalous data - the stray ranking from one service that by itself can unfairly pull a team up or down one or more seeding lines. So I judged each rating service by counting its number of anomalies. I kept in mind that 2 services judged season-long performance and 2 focused a bit more on recent performance, so each service was judged on its own timeframe. Of course my evaluation was viewed thru the subjectivity of my own opinion, but I've seen all top 60 teams multiple times so at least it was a somewhat informed opinion.

I have done this exercise multiple times since December and my conclusion has been the same each time: RPI produces BY FAR the most anomalies and Sagarin produces the 3rd most anomalies. Massey Power produced the fewest anomalies, but Massey Rating also produced very few anomalies. (That Massey distinction makes sense - their Power ranking emphasizes recent performance, so they're looking at a smaller universe of games with less likely variation from my own personal opinion). Given this assessment, I felt that I had to overweight the Massey ratings in some manner.

7. So here's the formula I came up with. Since the NCAA wants to judge the entire body of work and yet emphasize how a team finished, I gave an equal 50% weight to measures of season-long performance (RPI and Massey Rating) and to the more predictive measures which factor recent performance (Sagarin Predictor and Massey Power). My own personal preference would be to overweight Massey in each category but I wanted to guard against my own biases and besides, the fact that a Massey rating is used in each category already gives that service its due respect. I hated using the anomaly-ridden RPI, but felt that I had to respect an NCAA-derived methodology.

The most elegant solution was to simply give an equal 25% weighting to all 4 rankings. It had the added advantage of being easy to understand (and hopefully sounding acceptable) on a first reading. Within each ranking, I converted their numbers so that the strongest Division I team received a 100 rating and the weakest team a 0 (for RPI, I used the WarrenNolan data) in order to ensure that each ranking retained its 25% weighting. I made my final decision on methodology without running any numbers, so I was not trying to game the formula to anyone's advantage. In fact, I was afraid to look at the first results, fearing that the emphasis on point-spread ratings might somehow work to the advantage of certain conferences or more offensive-minded teams. But I was very pleased with the results, though there are always at least a couple of bubble decisions that make me wince. The biggest surprise was how well these objective measures reflected on the lesser-known mid-majors beyond Princeton and Green Bay. It will be fascinating to see what happens if some of these mid-major leaders lose in their conference tournaments, because some very surprising no-names could finish ahead of the power-conference also-rans for the final bubble spots. So the historical bias against mid-majors will not only be reflected in the committee's seedings but may also be reflected in this season's bubble.
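The anomaly count in step 6 can be made concrete. Compare each service's positional rank with a personal ranking and count the teams where the two differ by at least some cutoff. The 10-spot cutoff, the helper name, and the ranks below are all assumptions for illustration; the post does not say what gap counted as an anomaly.

```python
def count_anomalies(service: dict[str, int], personal: dict[str, int],
                    cutoff: int = 10) -> int:
    """Teams (present in both rankings) whose service rank is at least `cutoff`
    spots away from the personal rank. The cutoff is a hypothetical choice."""
    return sum(1 for team, mine in personal.items()
               if team in service and abs(service[team] - mine) >= cutoff)


# Made-up ranks for illustration only.
personal = {"A": 1, "B": 2, "C": 3, "D": 4}
services = {
    "RPI": {"A": 1, "B": 15, "C": 20, "D": 4},
    "Massey Power": {"A": 2, "B": 1, "C": 3, "D": 6},
}
for name, ranks in services.items():
    print(name, count_anomalies(ranks, personal))
```

Step 6 judges each service on its own timeframe. In practice, then, a season-long service would be checked against a season-long personal ranking and a recency-weighted service against a current one, so `personal` might come in two versions.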

_________________

AKA WayneBear at BearInsider.com

Avatar: Paige Bowie, Jennie Leander and Lauren Ashbaugh celebrate after Cal's upset of #9 Colorado State on December 21, 1998


beknighted

Joined: 11 Nov 2004

Posts: 11050

Location: Lost in D.C.

Posted: 02/03/15 4:22 pm

| WayneBearCal wrote: |

ESPN bracketologist Charlie Creme has addressed the "Princeton Predicament" in this excellent article that accurately details the twisted logic of traditional selection-committee thinking:

http://espn.go.com/womens-college-basketball/story/_/id/12266750/are-princeton-tigers-closing-ivy-league-best-ncaa-tournament-seed

Anyway, note that while doing very little over the past month (largely due to a 3-week break), Princeton has moved up in ESPN's bracketology since January 5 from a #10 seed to a #7 and now a #6. No doubt feedback from someone close to the committee helped trigger the first move from 10 to 7, but I think the move is primarily due to the growing realization that traditional committee thinking is going to be severely tested if they have to discuss an undefeated Princeton team. I'm sure some people are hoping that the issue will simply go away (i.e. that the Tigers will lose an Ivy League game). |

Or Charlie has no idea what he's doing, always a possibility.

Honestly, I don't see why the committee would have that much trouble with an undefeated Princeton team (and, mind you, I'm hoping they do go undefeated). They've looked at teams in less-competitive conferences hundreds of times and seeded them using the same criteria they always use. Princeton will have 0 RPI top 25 wins and maybe 2 or 3 RPI top 50 wins by the end of the year, and likely will be seeded accordingly. Teams with an RPI of 17 (which probably is higher than where the Tigers will end up) have been seeded anywhere from 3 to 9 since 2000, mostly 4 or 5, and I'm not sure I'd expect much different.

I think people have to be careful about looking at Princeton's ranking, too. They're benefitting now from poll logic - they don't lose, while other teams do, so they're likely to rise at least some between now and the tournament. A top-15 ranking (a real possibility) isn't necessarily indicative of how good voters really think Princeton is, particularly since almost nobody will see any Princeton games.


summertime blues

Joined: 16 Apr 2013

Posts: 7841

Location: Shenandoah Valley

Posted: 02/03/15 5:36 pm

I have rarely thought that Mr. Creme had much idea what he was doing. That, however, is my opinion and one apparently not shared by ESPN, which continues to employ him.

_________________

Don't take life so serious. It ain't nohows permanent.

It takes 3 years to build a team and 7 to build a program.--Conventional Wisdom


Fighting Artichoke

Joined: 12 Dec 2012

Posts: 4040

Posted: 02/04/15 12:07 pm

| summertime blues wrote: |

| I have rarely thought that Mr. Creme had much idea what he was doing. That, however, is my opinion and one apparently not shared by ESPN, which continues to employ him. |

Creme's job is not to produce the best bracket but rather to anticipate the committee's reasoning and predict the bracket they create. He does an excellent job of that. I disagree with the far-too-early top 25 he releases immediately after the season ends, but he's excellent at bracketology.


cthskzfn

Joined: 21 Nov 2004

Posts: 12851

Location: In a world where a PSYCHOpath like Trump isn't potus.

Posted: 02/04/15 9:12 pm

To answer the OP (after having seen the PU @ Harvard game), no.

_________________

Silly, stupid white people might be waking up.


Ladyvol777

Joined: 02 Jul 2013

Posts: 248

Posted: 02/04/15 9:29 pm

I see Princeton no higher than a 4 or 5.


GlennMacGrady

Joined: 03 Jan 2005

Posts: 8227

Location: Heisenberg

Posted: 02/04/15 9:30 pm

No.


WayneBearCal

Joined: 09 Apr 2014

Posts: 33

Posted: 02/09/15 6:39 pm

With the NCAA Selection Committee set to release its preliminary top 20 seeds on Wednesday, here's an update on the "proper" bracket. This ranking is based on games thru 2/8, the same timeframe for ESPN's latest bracketology. (EDIT: After the games of 2/9, I re-checked the top 5 teams and Notre Dame is now #2 and South Carolina #3. I won't change the list below since I want to preserve the comparison to the ESPN bracket.)

The field is listed in S-Curve order, even though I only provided the seeding line. I've also shown ESPN's bracketology seeding in parentheses (note that I use ESPN's seedings before any procedural bumps to best reflect Creme's original S-Curve).

1 (1) Connecticut

1 (1) South Carolina (dropped to 3rd after 2/9)

1 (1) Notre Dame (rose to 2nd after 2/9)

1 (1) Baylor

2 (2) Maryland

2 (2) Tennessee

2 (5) Princeton

2 (4) Louisville

3 (2) Oregon St

3 (2) Florida St

3 (3) Duke

3 (3) Arizona St

4 (3) Iowa

4 (3) Kentucky

4 (4) George Washington

4 (5) North Carolina

5 (6) Syracuse

5 (7) South Florida

5 (4) California

5 (10) FL Gulf Coast

6 (7) James Madison

6 (5) Washington

6 (6) Stanford

6 (5) Rutgers

7 (4) Mississippi St

7 (8) Dayton

7 (8) Oklahoma

7 (6) Texas A&M

8 (6) Nebraska

8 (8) WI Green Bay

8 (9) Texas

8 (8) Chattanooga

9 (7) Ohio St

9 (9) Pittsburgh

9 (9) Northwestern

9 (12) DePaul

10 (7) Georgia

10 (13) Ark Little Rock

10 (11) Michigan

10 (11) W Kentucky

11 (10) Gonzaga

11 (9) Seton Hall

11 (11) Miami FL

11 (10) Minnesota

12 (10) Iowa St

12 (--) Tulane (in ESPN's first four out)

12 (12) Quinnipiac

12 (--) MTSU (in ESPN's first four out)

13 (13) Wichita St (ESPN selected Drake in the MVC)

13 (12) Arkansas

13 (13) Ohio

13 (13) Fresno St

14 (--) South Dakota St (ESPN selected South Dakota in the Summit)

14 (14) Albany NY

14 (--) Long Beach St (ESPN selected Hawaii in the Big West)

14 (14) American Univ

15 (15) Montana

15 (14) Liberty

15 (15) Hampton

15 (16) TN Martin

16 (15) New Mexico St

16 (16) Bryant (ESPN selected Central Conn St in the Northeast)

16 (16) SF Austin

16 (16) TX Southern (ESPN selected Lamar in the Southland)
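Each seed line in the list above covers four consecutive S-curve positions, which is why the list can be read in S-curve order even though only the seed line is shown. A small sketch of that mapping in Python, assuming a 64-team field of 16 lines with four teams each and no procedural bumps (`seed_line` is a hypothetical helper name, not part of any official tool):

```python
def seed_line(s_curve_position: int, teams_per_line: int = 4) -> int:
    """Map a 1-based S-curve position to its seed line.

    Assumes a 64-team field split into 16 lines of four teams each,
    before any procedural bumps are applied.
    """
    if not 1 <= s_curve_position <= 16 * teams_per_line:
        raise ValueError("S-curve position out of range")
    # Positions 1-4 -> line 1, 5-8 -> line 2, ..., 61-64 -> line 16.
    return (s_curve_position - 1) // teams_per_line + 1


# Princeton is 7th in the proper S-curve and James Madison is 21st.
print(seed_line(7), seed_line(21))
```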

The committee's preliminary release on Wednesday will list the first four seeds in order and then the next 16 in alphabetical order. Charlie Creme offers a good discussion of the implications of this announcement in this article:

http://espn.go.com/womens-college-basketball/story/_/id/12301142/ncaa-selection-committee-top-20-reveal-tell-us-much
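The reveal format described above can be illustrated with a short Python sketch. It is a hypothetical helper (`top20_reveal` is not an NCAA or ESPN tool) applied to the first 21 teams of the proper S-curve listed above. It shows what the announcement keeps and what it hides: the top four stay in order, but the alphabetical listing of the next 16 conceals whether, say, Princeton sits 5th or 20th, and a team at 21 (James Madison here) simply does not appear.

```python
from typing import List, Tuple


def top20_reveal(s_curve: List[str]) -> Tuple[List[str], List[str]]:
    """Split an S-curve into the preliminary-reveal format:
    the first four teams in order, then the next sixteen alphabetically."""
    top_four = s_curve[:4]
    # Case-insensitive alphabetical order for the remaining sixteen.
    next_sixteen = sorted(s_curve[4:20], key=str.casefold)
    return top_four, next_sixteen


proper_s_curve = [
    "Connecticut", "South Carolina", "Notre Dame", "Baylor",
    "Maryland", "Tennessee", "Princeton", "Louisville",
    "Oregon St", "Florida St", "Duke", "Arizona St",
    "Iowa", "Kentucky", "George Washington", "North Carolina",
    "Syracuse", "South Florida", "California", "FL Gulf Coast",
    "James Madison",  # 21st: just outside the top 20
]

top_four, next_sixteen = top20_reveal(proper_s_curve)
print(top_four)
print(next_sixteen)
```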

This announcement effectively creates a new type of bubble, focusing on whether teams will either make or miss the top 20. Creme's subtitle identifies teams of notable interest as Princeton, James Madison and George Washington. Princeton is a #2 seed and George Washington a #4 seed in the proper bracket, but of course given traditional biases they are ranked much lower by the committee (with the Tigers being punished more than GW). I agree that both are true bubble teams for the top 20 and will be the most interesting aspect of the announcement. I'm not sure why he included James Madison - they're not in the top 25 of his S-Curve and while they're #21 in this "proper" bracket, they would be rated lower by the committee. I don't even think of JMU as a bubble team for the top 20.

The other disagreements between the proper S-curve and ESPN's bracketology won't be an issue on Wednesday. The proper S-curve includes Syracuse, South Florida and Florida Gulf Coast in the top 20, all of which are unlikely to be considered top-20 teams by the committee. In their place, ESPN's Creme lists Mississippi State, Washington and Rutgers in his top 20, a much better guess as to which conference affiliations will be rewarded in Wednesday's announcement.


pilight

Joined: 23 Sep 2004

Posts: 66910

Location: Where the action is

Posted: 02/13/15 11:34 am

Something to consider, the Ivy League has a better Conference RPI than the AAC.

_________________

I'm a lonely frog

I ain't got a home


Fighting Artichoke

Joined: 12 Dec 2012

Posts: 4040

Posted: 02/13/15 12:03 pm

| pilight wrote: |

| Something to consider, the Ivy League has a better Conference RPI than the AAC. |

You may want to recheck that. Warren Nolan has AAC 7th and the Ivy 9th. Were you thinking of the Atlantic 10?

http://warrennolan.com/basketballw/2015/conferencerpi

1. Big12

2. ACC

3. SEC

4. B1G

5. PAC12

6. Atlantic 10

7. AAC

8. West Coast

9. Ivy

10. Big East


pilight

Joined: 23 Sep 2004

Posts: 66910

Location: Where the action is

Posted: 02/13/15 12:22 pm

| Fighting Artichoke wrote: |

| pilight wrote: |

| Something to consider, the Ivy League has a better Conference RPI than the AAC. |

You may want to recheck that. Warren Nolan has AAC 7th and the Ivy 9th. Were you thinking of the Atlantic 10?

http://warrennolan.com/basketballw/2015/conferencerpi

1. Big12

2. ACC

3. SEC

4. B1G

5. PAC12

6. Atlantic 10

7. AAC

8. West Coast

9. Ivy

10. Big East |

WBBState and Real Time RPI have the Ivy #7 and the AAC #8.


pilight

Joined: 23 Sep 2004

Posts: 66910

Location: Where the action is

Posted: 02/13/15 12:24 pm

Toil and Trouble


Fighting Artichoke

Joined: 12 Dec 2012

Posts: 4040

Posted: 02/13/15 2:15 pm

| pilight wrote: |

| Fighting Artichoke wrote: |

| pilight wrote: |

| Something to consider, the Ivy League has a better Conference RPI than the AAC. |

You may want to recheck that. Warren Nolan has AAC 7th and the Ivy 9th. Were you thinking of the Atlantic 10?

http://warrennolan.com/basketballw/2015/conferencerpi

1. Big12

2. ACC

3. SEC

4. B1G

5. PAC12

6. Atlantic 10

7. AAC

8. West Coast

9. Ivy

10. Big East |

WBBState and Real Time RPI has the Ivy #7 and the AAC #8. |

I don't trust realtimerpi, as they seem late to update and sort of wonky. Wbbstate is better on their RPI but I've found Nolan to be the best and it updates instantly. Regardless, that's a large discrepancy between Nolan and Wbbstate. If they somehow align by tomorrow, it might be an updating discrepancy, but such a large difference between Wbbstate and Nolan seems odd to me.
